1. Introduction to Multi-Agent Systems

As artificial intelligence continues to advance, the idea of a single, all-knowing model handling every task is quickly giving way to something more powerful, scalable, and human-like: multi-agent systems (MAS). If you’ve ever collaborated with a team to accomplish a goal — dividing responsibilities, making decisions based on context, or checking in on each other’s progress — you’ve experienced exactly what multi-agent systems are trying to replicate in software.

In simple terms, a multi-agent system consists of multiple autonomous entities, or agents, that can interact, collaborate, and coordinate with one another to complete tasks. Each agent typically has its own capabilities, goals, and sometimes even its own logic or learning mechanisms. These agents can work independently, or they can cooperate to handle tasks that are too complex for a single entity to manage alone.

Why Are Multi-Agent Systems Important?

The promise of AI is not just in building smarter models but in creating more intelligent ecosystems. Here’s why MAS are gaining attention:

- Scalability: Tasks can be broken into smaller, manageable subtasks and distributed across agents.
- Specialization: Each agent can be fine-tuned or purpose-built for a specific domain or operation.
- Robustness: If one agent fails, others can pick up the slack or adapt, unlike a monolithic system that might crash entirely.
- Collaboration: Agents can work together, solve problems as a team, and improve overall outcomes.

Whether it’s a system of bots running a game simulation, a digital workforce managing customer queries, or AI assistants performing autonomous research — multi-agent architectures are already everywhere.

Real-World Examples of Multi-Agent Systems

Let’s ground this concept in reality. Here are just a few places where MAS are currently at work:

- Autonomous Vehicles: Cars that share road information, negotiate merges, and prevent collisions.
- Smart Grid Systems: Distributed control systems that balance power supply and demand.
- Financial Markets: Trading bots (agents) that react to market changes in real time, often working in groups.
- AI-Powered Assistants: Products such as Google’s Gemini (formerly Bard) or OpenAI’s ChatGPT, which can hand tasks like coding, writing, or summarization to specialized components behind the scenes.
- Multi-Agent Video Games: AI-controlled teammates and opponents in real-time strategy or role-playing games.

Why Now? The Rise of Agentic AI

The surge of interest in LLMs (large language models) such as GPT-4 and Gemini has renewed excitement about agent-based systems. Rather than relying on a single model to do everything, we can now build modular systems of agents that track their own state and communicate with one another. Such systems can be more efficient and are closer to how human collaboration actually works.

Multi-agent systems also pair beautifully with modern concepts like:

- Tool-using agents (e.g., agents using web search or APIs)
- Memory-based reasoning
- Autonomous task completion with recursive planning

With the recent introduction of platforms like Google’s Agent Development Kit (ADK), developers finally have the tools to build, orchestrate, and scale these agent networks with more control and less overhead.

From Single Agents to Agent Ecosystems

In most early AI systems, a single agent was expected to do everything: understand the input, plan, execute, and even self-monitor. This model quickly hit limits when applied to more complex, real-world scenarios.

With MAS, you get:

- Planner Agents that define the overall goal.
- Worker Agents that execute specific steps (like summarizing documents or generating images).
- Communicator Agents that handle interactions between systems or users.
- Evaluator Agents that measure outcomes and recommend next steps.

This division of labor creates systems that are more agile, maintainable, and scalable — and that’s what the Google ADK helps developers achieve.

This guide walks through how you can use Google’s Agent Development Kit to build your own agent-based systems from scratch. Whether you’re developing an internal assistant for business workflows, a simulation for research, or a product idea powered by autonomous AI, MAS architecture is a powerful foundation.

2. What Is Google’s Agent Development Kit (ADK)?

As multi-agent systems gain traction, developers need practical, scalable tools to build and orchestrate them — and that’s where Google’s Agent Development Kit (ADK) comes in.

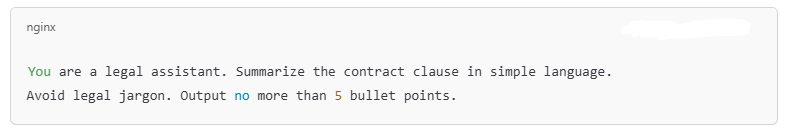

Google ADK is an open-source framework designed to help developers create, manage, and scale collaborative AI agents. Announced at Google Cloud NEXT in April 2025, it is part of Google’s broader push toward agentic systems that can reason, plan, and work together with minimal human supervision.

But what exactly is it? Why is it generating buzz in the AI community? And how does it stand out from similar frameworks like LangChain and AutoGen? Let’s break it down.

What Is ADK?

At its core, Google’s Agent Development Kit is a modular Python-based framework that lets you:

- Define different types of agents with distinct goals, capabilities, and behaviors.
- Coordinate interactions between agents using an orchestrator.
- Integrate tools like web search, calculators, and memory storage into each agent.
- Control workflows, communication, and task delegation in a structured yet flexible way.

Whether you want to create a simple chatbot with delegated capabilities, or a complex research team of specialized AI agents — ADK gives you a standardized way to do it.

Why Did Google Build the ADK?

Most current large language models (LLMs) are incredibly capable, but they’re limited in how they handle complexity, memory, and collaboration. The ADK was born out of the need to:

- Break down complex tasks into agent-specialized subtasks.
- Enable agentic planning and execution, not just reactive responses.
- Provide structure, traceability, and evaluation within agent networks.

In short, Google built ADK to help developers move from monolithic LLMs to intelligent agent teams, modeled more closely on how humans work in groups.

Key Features at a Glance

| Feature | Description |
| --- | --- |
| Agents | Autonomous entities with their own logic, tools, and goals. |
| Orchestrator | The central controller that routes tasks between agents and ensures goals are being pursued efficiently. |
| Tools & Toolkits | Built-in and custom tools (e.g., search, math, web scraping) that agents can use to complete tasks. |
| Memory & Context | Each agent can carry context, memory, or even “awareness” of prior interactions. |
| Observability | Google includes rich tracing/logging to monitor every agent interaction, decision, and error. |

This modular setup ensures that you can plug and play different components, experiment quickly, and adapt the framework to your needs.

ADK vs LangChain vs AutoGen: A Quick Comparison

To better understand where ADK fits, let’s look at how it compares to other popular multi-agent frameworks:

| Feature | Google ADK | LangChain | AutoGen (Microsoft) |
| --- | --- | --- | --- |
| Focus | Multi-agent orchestration | Agent tools & chains | Conversational agents & collaboration |
| Orchestrator Included | Yes | No (needs manual logic) | Yes |
| Tool Integration | Built-in & extensible | Strong plugin support | Predefined tools |
| Memory System | Built-in | Optional via integrations | Dialogue-based memory |
| Observability | Advanced tracing | Minimal | Moderate logs |
| Open Source | Yes | Yes | Yes |

So while LangChain is powerful for chaining tools and pipelines, and AutoGen excels in multi-turn agent dialogue, ADK positions itself as a structured, scalable platform for orchestrating full-fledged agent systems with memory, tooling, and oversight built in.

How ADK Fits Into the AI Ecosystem

Google ADK is not just another agent framework — it’s designed to help developers build production-ready agent ecosystems with:

- Agent awareness (via internal states and contextual history)
- Controlled workflows (deterministic or stochastic)
- Tool-based reasoning (like reasoning over APIs or documents)
- Modularity for scaling (you can add or remove agents with minimal refactoring)

It supports many of the use cases startups and enterprises are exploring today: internal AI copilots, customer support teams, AI research groups, smart assistants, and even game simulations.

3. Core Concepts in Google ADK

To build effectively with Google’s Agent Development Kit (ADK), it’s essential to understand its architectural components. ADK isn’t just a toolkit—it’s a philosophy for designing modular, intelligent, and cooperative agentic systems. This section covers the key concepts and abstractions you’ll use when developing with ADK.

1. Agents

An agent in ADK is an autonomous unit that can perceive, reason, act, and interact. Agents are designed with specific roles and are capable of:

- Receiving tasks or goals
- Planning or interpreting what needs to be done
- Executing actions using internal logic or external tools
- Communicating with other agents
- Reporting results back to the orchestrator

Agents can be general-purpose or role-specific. For example, one agent could handle summarizing documents while another specializes in performing calculations or running API queries.

ADK allows agents to be defined declaratively and programmatically, making it easy to configure and extend their behavior.

2. Orchestrator

The orchestrator is the central brain of an ADK-powered system. Its responsibilities include:

- Assigning tasks to the appropriate agent(s)
- Managing agent-to-agent communication
- Maintaining system-level context or goals
- Evaluating outcomes or triggering follow-up tasks
- Logging and tracing interactions

Think of the orchestrator as a project manager. It ensures that tasks are distributed to the right people (agents), keeps track of progress, and makes course corrections when needed.

In ADK, you can configure different types of orchestrators depending on your use case: linear execution, recursive planning, collaborative pipelines, or dynamic delegation based on real-time performance.

3. Tools

Tools are functions or utilities that agents can call upon to perform specific tasks. These can include:

- Web search
- Document parsing
- Math computation
- API integrations
- Database lookups
- Code execution

In ADK, tools are treated as first-class citizens. Developers can register tools in a shared registry that any agent can access. Each tool is defined by its schema (input/output), usage rules, and optional constraints (e.g., rate limiting).

Tools allow agents to go beyond just LLM prompting—they become capable of doing real work and interacting with external systems.
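As a rough illustration of the registry idea described above, the sketch below uses plain Python rather than ADK's actual classes. `ToolSpec`, `ToolRegistry`, and the `max_calls` constraint are hypothetical names chosen for this example; they show one way a tool could carry an input/output schema and a simple usage limit.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ToolSpec:
    """Hypothetical tool description: a callable plus its schema and limits."""
    name: str
    func: Callable[..., Any]
    input_schema: Dict[str, type]   # argument name -> expected type
    output_type: type
    max_calls: int = 100            # crude stand-in for a rate limit
    calls_made: int = 0


class ToolRegistry:
    """Shared registry that any agent can look tools up in."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def call(self, name: str, **kwargs: Any) -> Any:
        spec = self._tools[name]
        if spec.calls_made >= spec.max_calls:
            raise RuntimeError(f"tool '{name}' exceeded its call limit")
        # Check the arguments against the declared input schema.
        for arg, expected in spec.input_schema.items():
            if not isinstance(kwargs.get(arg), expected):
                raise TypeError(f"'{arg}' must be {expected.__name__}")
        spec.calls_made += 1
        result = spec.func(**kwargs)
        if not isinstance(result, spec.output_type):
            raise TypeError(f"tool '{name}' returned an unexpected type")
        return result


registry = ToolRegistry()
registry.register(ToolSpec(
    name="add",
    func=lambda a, b: a + b,
    input_schema={"a": int, "b": int},
    output_type=int,
    max_calls=2,
))
print(registry.call("add", a=2, b=3))
```

Keeping the schema next to the callable means the registry, not each agent, is responsible for rejecting malformed calls.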

4. Task and Goal Management

Tasks in ADK are atomic units of work, like “summarize this document” or “fetch the user’s recent transactions.” Goals are broader objectives composed of one or more tasks.

Agents can:

- Be assigned a task directly by the orchestrator
- Create subtasks and delegate to other agents
- Escalate unresolved tasks for review or retry

This task-based model enables agents to reason recursively. For instance, a “Research Agent” might delegate reading to one agent, summarization to another, and synthesis to a third, and then aggregate the final result.

5. Memory and Context

Effective multi-agent systems need continuity, especially when tasks span multiple steps or require historical knowledge. ADK supports two types of memory:

- Local Memory: Per-agent memory that includes past inputs, outputs, tool invocations, and role-specific notes.
- Shared Context: A global space managed by the orchestrator, accessible to all agents (with permission), where high-level goals, shared facts, or environmental state can be stored.

This allows for intelligent coordination. For example, an agent that completed a subtask can leave a “breadcrumb” in shared memory, which other agents can reference in future steps.
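The "breadcrumb" idea can be sketched in a few lines of plain Python. This is not ADK's memory API; `SharedContext` and `MemoryAgent` are hypothetical names used only to show the split between per-agent memory and a shared space.

```python
from typing import Any, Dict, List


class SharedContext:
    """Hypothetical global store that the orchestrator would own."""

    def __init__(self) -> None:
        self._facts: Dict[str, Any] = {}

    def write(self, key: str, value: Any) -> None:
        self._facts[key] = value

    def read(self, key: str, default: Any = None) -> Any:
        return self._facts.get(key, default)


class MemoryAgent:
    """Agent with private local memory plus access to the shared context."""

    def __init__(self, name: str, shared: SharedContext) -> None:
        self.name = name
        self.shared = shared
        self.local_memory: List[str] = []   # per-agent history

    def finish_subtask(self, subtask: str, result: str) -> None:
        self.local_memory.append(f"{subtask} -> {result}")
        # Leave a breadcrumb that other agents can pick up later.
        self.shared.write(subtask, {"by": self.name, "result": result})


shared = SharedContext()
reader = MemoryAgent("reader", shared)
writer = MemoryAgent("writer", shared)

reader.finish_subtask("extract_key_points", "3 key points found")
breadcrumb = writer.shared.read("extract_key_points")
print(breadcrumb["by"], breadcrumb["result"])
```

Note that the writer sees the breadcrumb through the shared context while its own local memory stays empty.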

6. Schemas and Validation

ADK uses structured schemas to define interactions between agents, tools, and orchestrators. Each input, output, or memory item has a defined type and structure, ensuring clarity, reliability, and easier debugging.

This structure is especially valuable in multi-agent setups where ambiguous data can lead to failure or unexpected outcomes. By enforcing schema compliance, ADK helps maintain system integrity even as complexity scales.
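To make schema enforcement concrete, here is a minimal sketch using only the standard library. The `SummaryResult` schema and the `validate` helper are assumptions made for illustration, not part of ADK; a real project might use a validation library instead.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict


@dataclass
class SummaryResult:
    """Hypothetical output schema for a summarization agent."""
    source_url: str
    summary: str
    word_count: int


def validate(payload: Dict[str, Any], schema: type) -> Any:
    """Build a schema instance, rejecting missing, extra, or mistyped fields."""
    expected = {f.name: f.type for f in fields(schema)}
    missing = expected.keys() - payload.keys()
    extra = payload.keys() - expected.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for name, expected_type in expected.items():
        if not isinstance(payload[name], expected_type):
            raise TypeError(f"{name} must be {expected_type.__name__}")
    return schema(**payload)


good = validate(
    {"source_url": "https://example.com/a", "summary": "Short text", "word_count": 2},
    SummaryResult,
)
print(good)
```

Validating at the boundary between agents means a malformed message fails loudly where it was produced instead of surfacing several steps later.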

7. Observability and Debugging

A major strength of ADK is its built-in observability. Developers get:

- Full logs of agent decisions, tool usage, and context updates
- Tracing of task delegation and orchestration paths
- Metrics on agent performance, latency, and outcomes

This makes it possible to debug issues like:

- Why did a certain agent fail to complete a task?
- Which tool calls produced errors?
- Did the orchestrator route the task correctly?

You can also visualize the lifecycle of a task—how it moved from agent to agent, how tools were used, and what the final output looked like.

These core concepts—agents, orchestrators, tools, tasks, memory, and schemas—form the foundation of every ADK-based application. Whether you’re building a simple two-agent system or a large-scale collaborative AI workflow, understanding these abstractions is critical.

4. Getting Started with ADK – Setup and Installation

Before you build your first agent system with Google ADK, you need to set up a development environment that supports ADK’s modular, Python-based framework. Fortunately, getting started is fairly straightforward, especially if you’re already familiar with Python and virtual environments.

1. System Requirements

To run Google ADK, you’ll need the following:

- Python: Version 3.9 or later (preferably 3.10+)
- Pip: Python’s package installer
- Git: To clone the ADK repository
- Poetry (optional): For dependency management
- Linux/macOS/WSL: ADK is tested primarily on Unix-based systems, though Windows can work with additional setup

Hardware-wise, there’s no specific requirement unless you’re running large-scale tasks or local LLMs. Cloud environments like Colab, Vertex AI, or local GPUs are optional but helpful for advanced use.

2. Cloning the ADK Repository

The official Google ADK code is open source and available on GitHub. To get started, clone the repository with `git clone`; this downloads the latest version of the Agent Development Kit to your local machine.

3. Setting Up a Virtual Environment

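It’s best practice to isolate your ADK environment from other Python projects. You can use venv or conda; with venv, for example, run `python3 -m venv .venv` followed by `source .venv/bin/activate`.

Then upgrade pip with `python -m pip install --upgrade pip`.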

4. Installing Dependencies

Inside the ADK repo, install the required dependencies. Google provides a requirements.txt or pyproject.toml (for Poetry users).

Using pip, run `pip install -r requirements.txt`.

Or, using Poetry, run `poetry install`.

5. Running Tests to Verify the Installation

To confirm that everything is working, run the built-in tests (for example with `pytest`, assuming the repository’s tests use it) or execute one of the sample scripts that ship with the repository.

If the environment is set up correctly, you’ll see logs of agent activity, tool calls, and orchestrator outputs printed to your terminal.

6. Optional: Integrating LLMs and Tools

While ADK doesn’t ship with language models directly, it supports Google’s Gemini models, OpenAI models, and local models via integrations. To enable these:

- Set up API keys in environment variables.
- Configure tool usage in YAML or Python.
- Optionally, add LangChain, Transformers, or other plugins for tool expansion.

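Example: export the provider’s key as an environment variable (for OpenAI, the conventional name is `OPENAI_API_KEY`), then use the relevant model integration from ADK’s tool registry.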

7. Best Practices for Setup

- Use `.env` files for storing credentials securely
- Keep dependencies in sync using lock files or Poetry
- Consider Docker for containerized environments
- Use virtual environments for clean reproducibility

By the end of this setup, your machine should be ready to start building with Google ADK. You’ll have:

- Cloned the repository
- Installed required packages
- Verified orchestration and agent execution
- (Optionally) configured LLMs and tools

5. Building Your First Multi-Agent System with ADK

Now that your environment is ready, it’s time to build something tangible. In this section, we’ll walk through creating a simple yet functional multi-agent system using the Agent Development Kit (ADK). The goal is to help you understand how to define agents, register tools, assign tasks, and orchestrate the overall interaction.

We’ll build a system where:

- One agent searches for articles on a topic
- Another agent summarizes the content
- A third agent performs sentiment analysis on the summaries
- The orchestrator coordinates the workflow and aggregates results

1. Project Structure

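A basic project structure keeps the agents in one package, the tools in another (for example `tools/web_search_tool.py`), and the orchestration logic in `orchestrator.py`, with `main.py` as the entry point.

This modular design keeps agents, tools, and orchestration logic separate for easy testing and reuse.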

2. Defining Your Tools

Let’s begin by creating a simple web search tool that uses DuckDuckGo or a mock API to return links related to a topic.

tools/web_search_tool.py

This tool takes a query and returns a list of URLs (mocked in this example).
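A minimal sketch of such a tool follows. It is a plain Python function, not an ADK tool class, and it makes no network call: the `web_search` name, the `max_results` parameter, and the `example.com` URLs are all placeholders invented for this example.

```python
from typing import List
from urllib.parse import quote_plus


def web_search(query: str, max_results: int = 3) -> List[str]:
    """Return mock result URLs for a query; no network call is made."""
    if not query.strip():
        raise ValueError("query must not be empty")
    # Encode the query so it is safe to embed in a URL path.
    slug = quote_plus(query.strip().lower())
    return [
        f"https://example.com/{slug}/article-{i}"
        for i in range(1, max_results + 1)
    ]


print(web_search("multi-agent systems"))
```

Swapping the body for a real search API later would not change the function's signature, so callers would stay untouched.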

3. Creating the Agents

Search Agent

Summary Agent

Sentiment Agent
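The three agents named above might look something like the following. This is a sketch in plain Python under stated assumptions: the class names, the `run` method, and the keyword-based sentiment heuristic are illustrative choices, not ADK's agent interface, and the summaries are placeholders rather than LLM output.

```python
from typing import Callable, Dict, List


class SearchAgent:
    """Finds article URLs by delegating to a search tool (any callable)."""

    def __init__(self, search_tool: Callable[[str], List[str]]) -> None:
        self.search_tool = search_tool

    def run(self, topic: str) -> List[str]:
        return self.search_tool(topic)


class SummaryAgent:
    """Produces a placeholder summary per URL; a real version would call an LLM."""

    def run(self, urls: List[str]) -> List[str]:
        return [f"Summary of {url}: a promising overview." for url in urls]


class SentimentAgent:
    """Labels each summary using a tiny keyword heuristic (illustrative only)."""

    POSITIVE = {"promising", "good", "great"}
    NEGATIVE = {"risky", "bad", "poor"}

    def run(self, summaries: List[str]) -> List[Dict[str, str]]:
        results = []
        for text in summaries:
            words = set(text.lower().replace(".", "").replace(":", "").split())
            if words & self.POSITIVE:
                label = "positive"
            elif words & self.NEGATIVE:
                label = "negative"
            else:
                label = "neutral"
            results.append({"summary": text, "sentiment": label})
        return results


def mock_search(topic: str) -> List[str]:
    return [f"https://example.com/{topic}/1"]


urls = SearchAgent(mock_search).run("agents")
labelled = SentimentAgent().run(SummaryAgent().run(urls))
print(labelled[0]["sentiment"])
```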

4. Building the Orchestrator

Now let’s define the orchestrator, which will coordinate the three agents.

orchestrator.py
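One way to sketch the orchestrator is as a linear pipeline that passes each step's output to the next. `PipelineOrchestrator` and the three stand-in functions below are hypothetical and written in plain Python; they are meant to show the control flow, not ADK's own orchestration classes.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


class PipelineOrchestrator:
    """Runs named steps in order, feeding each output into the next step."""

    def __init__(self, steps: List[Step]) -> None:
        self.steps = steps

    def run(self, initial_input: Any) -> Dict[str, Any]:
        results: Dict[str, Any] = {"input": initial_input}
        data = initial_input
        for name, step in self.steps:
            data = step(data)
            results[name] = data   # keep every intermediate output
        return results


# Stand-ins for the three agents; each is just a function here.
def search(topic: str) -> List[str]:
    return [f"https://example.com/{topic}/1", f"https://example.com/{topic}/2"]


def summarize(urls: List[str]) -> List[str]:
    return [f"Summary of {u}" for u in urls]


def analyze(summaries: List[str]) -> List[str]:
    return ["neutral" for _ in summaries]


orchestrator = PipelineOrchestrator(
    [("search", search), ("summary", summarize), ("sentiment", analyze)]
)
report = orchestrator.run("adk")
print(report["sentiment"])
```

Because the orchestrator only depends on callables, each agent can be tested or replaced on its own.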

5. Running the System

Finally, use a script to initialize and execute your orchestrator.

main.py

6. What You Just Built

You now have a working multi-agent pipeline where:

- A search agent retrieves mock URLs.
- A summary agent generates placeholder summaries.
- A sentiment agent analyzes the summaries.
- An orchestrator manages the flow, passing outputs between agents.

In a real application, you’d swap out mocks with actual APIs (e.g., Bing search, GPT-powered summarizers, NLP sentiment tools). But the structure remains the same.

7. Optional: Add Observability

To monitor behavior and improve transparency:

- Log input/output at each stage
- Visualize agent interactions using flowcharts or logs
- Store run data for review and analysis

You could also implement agent retries, confidence scores, or fallback behaviors if a tool fails or data is insufficient.

This section demonstrated how to build a minimal yet extensible multi-agent application using ADK. You learned how to:

- Define agents and tools
- Register them in a modular way
- Coordinate task flow through an orchestrator
- Run and verify output from a full system

6. Advanced Orchestration Strategies in ADK

As your multi-agent systems become more complex, the role of the orchestrator shifts from being a simple task dispatcher to a powerful reasoning engine. Advanced orchestration strategies enable agents to dynamically collaborate, reason recursively, and adapt to changing task flows — all key to unlocking the true potential of autonomous AI systems.

In this section, we’ll cover:

- Recursive orchestration and planning
- Dynamic agent spawning
- Cross-agent communication
- Memory and context sharing
- Error handling and fallback strategies

1. Recursive Orchestration and Planning

Recursive orchestration means the orchestrator can break down tasks into subtasks, loop through iterations, and even assign outputs of one agent back into the system for further analysis.

Example: Recursive Question Refinement

Suppose a user asks, “How can we reduce carbon emissions in urban areas?” The orchestrator can:

- Break the task into subtasks (transportation, housing, industry)
- Assign subtasks to specialized agents
- Recombine the responses
- Run a final synthesis

Recursive patterns are useful in:

- Research assistants
- Problem-solving agents
- Task planners (e.g., GPT-4 planning agents)

ADK allows recursion via orchestrator functions that call themselves or re-queue tasks dynamically.
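The self-calling pattern can be illustrated with a small, framework-free sketch. The `SUBTASKS` table and the `solve` function are hypothetical: a real planner would likely ask an LLM how to split a task, and leaf tasks would be handed to specialist agents. The `max_depth` guard is an assumption added here to keep recursion bounded.

```python
from typing import Dict, List

# Hypothetical decomposition table; a real planner might ask an LLM instead.
SUBTASKS: Dict[str, List[str]] = {
    "reduce urban carbon emissions": ["transportation", "housing", "industry"],
    "transportation": ["public transit", "electric vehicles"],
}


def solve(task: str, depth: int = 0, max_depth: int = 3) -> str:
    """Recursively split a task, answer the leaves, and synthesize upward."""
    children = SUBTASKS.get(task, [])
    if not children or depth >= max_depth:
        # Leaf: a specialist agent would produce the answer here.
        return f"[answer: {task}]"
    parts = [solve(child, depth + 1, max_depth) for child in children]
    # Synthesis step: recombine the sub-answers under the parent task.
    return f"{task}({', '.join(parts)})"


print(solve("reduce urban carbon emissions"))
```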

2. Dynamic Agent Spawning

Rather than preloading all agents at startup, ADK allows you to instantiate agents on demand based on task type, load, or contextual needs.

Why this matters:

- Scalability: Only use compute when needed
- Modularity: Add/remove capabilities without changing the orchestrator logic
- Personalization: Spawn agents tailored to specific users or domains

Example:

You can register agent classes in a factory pattern and spawn them dynamically:
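A factory of this kind could be sketched as follows. `AGENT_FACTORY`, `register_agent`, and `spawn` are hypothetical helper names written in plain Python; they illustrate the pattern and are not taken from ADK.

```python
from typing import Callable, Dict


class BaseAgent:
    def run(self, payload: str) -> str:
        raise NotImplementedError


AGENT_FACTORY: Dict[str, Callable[[], BaseAgent]] = {}


def register_agent(task_type: str):
    """Class decorator that records an agent class under a task type."""
    def decorator(cls):
        AGENT_FACTORY[task_type] = cls
        return cls
    return decorator


@register_agent("summarize")
class SummarizerAgent(BaseAgent):
    def run(self, payload: str) -> str:
        return payload[:20] + "..."


@register_agent("translate")
class TranslatorAgent(BaseAgent):
    def run(self, payload: str) -> str:
        return f"(translated) {payload}"


def spawn(task_type: str) -> BaseAgent:
    """Instantiate an agent only when a task of that type arrives."""
    try:
        return AGENT_FACTORY[task_type]()
    except KeyError:
        raise ValueError(f"no agent registered for '{task_type}'") from None


print(spawn("translate").run("hello"))
```

Adding a new capability then means registering one more class; the code that calls `spawn` does not change.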

3. Cross-Agent Communication

True collaboration requires agents to talk to each other — not just via the orchestrator. In ADK, agents can exchange messages, share memory, or broadcast updates.

There are three ways to facilitate this:

- Shared blackboard: Agents read/write from a shared state
- Direct messaging: Agents push data to specific peers
- Event bus: Publish-subscribe model where agents react to triggers

Example (Shared Context):
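The sketch below combines a shared blackboard with a simple publish-subscribe hook, so one agent can react when another posts data. The `Blackboard` class and its `subscribe`/`post` methods are assumptions made for this illustration and are not ADK APIs.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List


class Blackboard:
    """Shared state that also notifies subscribers when a key changes."""

    def __init__(self) -> None:
        self.state: Dict[str, Any] = {}
        self._listeners: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, key: str, callback: Callable[[Any], None]) -> None:
        self._listeners[key].append(callback)

    def post(self, key: str, value: Any) -> None:
        self.state[key] = value
        for callback in self._listeners[key]:
            callback(value)


board = Blackboard()
received: List[str] = []

# The "summary agent" reacts whenever the "search agent" posts new URLs.
board.subscribe("urls", lambda urls: received.extend(f"summarize {u}" for u in urls))
board.post("urls", ["https://example.com/a", "https://example.com/b"])

print(received)
```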

With more complex coordination, you may implement message-passing protocols or intermediate memory buffers.

4. Memory and Context Sharing

Many multi-agent workflows benefit from short-term memory (for a single task session) and long-term memory (persistent knowledge).

In ADK, you can implement memory as:

- Python dictionaries
- Vector stores (e.g., FAISS, Weaviate)
- Database-backed stores
- Context managers passed across agents

Example:

Long-term memory allows agents to refer back to previous knowledge or results from past sessions.

5. Error Handling and Fallback Agents

What happens when an agent fails? In production, graceful failure handling is critical.

Strategies include:

- Retry logic: Retry the agent with same or modified input
- Fallback agent: Use a backup agent with simpler logic
- Human-in-the-loop: Escalate the task to a person
- Logging and traceability: Track where and why failure occurred

Example fallback logic:
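A minimal version of retry-then-fallback could look like this. The function and agent names are invented for the example, and the agents are plain callables rather than ADK agents.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("orchestrator")


def run_with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    task: str,
    retries: int = 2,
) -> str:
    """Try the primary agent a few times, then hand off to the fallback."""
    for attempt in range(1, retries + 1):
        try:
            return primary(task)
        except Exception as exc:   # broad on purpose: any agent failure counts
            log.warning("primary failed (attempt %d/%d): %s", attempt, retries, exc)
    log.info("switching to fallback agent")
    return fallback(task)


def flaky_agent(task: str) -> str:
    raise TimeoutError("model did not respond")


def simple_agent(task: str) -> str:
    return f"basic answer for: {task}"


print(run_with_fallback(flaky_agent, simple_agent, "summarize report"))
```

The log lines double as a trace of where and why the failure occurred, which covers the traceability strategy from the list above.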

You can also include a retry queue or priority-based scheduler for more resilience.

6. Chaining and Conditional Execution

You might want to chain agents conditionally — only running the next agent if the prior output meets certain criteria.
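As a sketch of this idea, the chain below runs a sentiment step only when the summary step reports enough confidence. The confidence score is made up by the stand-in summarizer, and the function names and the `threshold` parameter are assumptions for illustration.

```python
from typing import Dict


def summarize(text: str) -> Dict[str, object]:
    """Stand-in summarizer that also reports a made-up confidence score."""
    confidence = 0.9 if len(text.split()) >= 5 else 0.3
    return {"summary": text[:40], "confidence": confidence}


def analyze_sentiment(summary: str) -> str:
    return "positive" if "good" in summary.lower() else "neutral"


def conditional_chain(text: str, threshold: float = 0.7) -> Dict[str, object]:
    result = summarize(text)
    if result["confidence"] >= threshold:
        # Only run the next agent when the previous output is trustworthy enough.
        result["sentiment"] = analyze_sentiment(result["summary"])
    else:
        result["sentiment"] = None
        result["note"] = "skipped sentiment: low-confidence summary"
    return result


print(conditional_chain("This is a good long article"))
print(conditional_chain("too short"))
```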

This kind of logic supports:

- Confidence-based pipelines
- Decision trees
- Adaptive workflows (e.g., exploration vs. exploitation)

7. Using Planning Agents Inside Orchestration

One powerful orchestration design is using a large language model as the planner agent inside the orchestrator. This agent receives a goal, plans the steps, and tells the orchestrator which agent to call and when.

This is popular in:

- LangGraph-style planners
- OpenAgents / AutoGPT frameworks
- Google’s own ADK templates

Example planner prompt:

“Given the user goal ‘create a market report’, determine the agents required and their order of execution.”

The response could be parsed and executed as a DAG (directed acyclic graph) of agent calls.
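Here is one way the parse-and-execute step might be sketched, using the standard library's `graphlib` (Python 3.9+). The JSON plan format, the agent names, and the `execute_plan` helper are assumptions for this example; the planner's reply is hard-coded rather than produced by a model.

```python
import json
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Pretend this JSON came back from the planner LLM: agent -> agents it depends on.
planner_response = """
{
  "research": [],
  "analysis": ["research"],
  "charts": ["analysis"],
  "report": ["analysis", "charts"]
}
"""

AGENTS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "research": lambda done: "raw market data",
    "analysis": lambda done: f"trends from {done['research']}",
    "charts": lambda done: f"charts of {done['analysis']}",
    "report": lambda done: f"report using {done['analysis']} and {done['charts']}",
}


def execute_plan(plan_json: str) -> Dict[str, str]:
    plan: Dict[str, List[str]] = json.loads(plan_json)
    # static_order() yields each agent after its dependencies; cycles raise CycleError.
    order = TopologicalSorter(plan).static_order()
    done: Dict[str, str] = {}
    for agent_name in order:
        done[agent_name] = AGENTS[agent_name](done)
    return done


results = execute_plan(planner_response)
print(list(results))
```

Validating the plan as a DAG before running it also catches planner mistakes such as circular dependencies.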

Advanced orchestration in ADK moves you from basic task routing to intelligent, adaptive multi-agent coordination. You learned how to:

- Break down tasks recursively
- Dynamically spawn and assign agents
- Enable agent-to-agent communication
- Use memory for richer context
- Handle failures and retries gracefully
- Create planner-led orchestration flows

These orchestration strategies are what power real-world agentic systems used in research assistants, autonomous software teams, and decision-making bots.

7. Tool Use in Multi-Agent Systems with ADK

A powerful agent isn’t limited to just language understanding — it can use tools to search, calculate, interact with databases, call APIs, and even run code. Tool use transforms agents from passive responders into active problem-solvers capable of real-world action.

This section covers:

- What are tools in agent systems?
- Common types of tools (search, calculator, APIs, DBs)
- How ADK enables tool integration
- Building custom tools
- Tool routing and selection
- Chaining tools with agents

1. What Are Tools in Agent Systems?

In the context of AI agents, tools are external functions or services that an agent can call to enhance its abilities. Tools let agents:

- Look up information (e.g., Google Search)
- Perform calculations (e.g., math solvers)
- Retrieve structured data (e.g., databases or vector stores)
- Trigger real-world actions (e.g., sending an email or booking a meeting)

For example:

“What’s the weather in London tomorrow?”

A language model cannot answer this from its pre-trained knowledge alone. It must invoke a weather API — that’s tool use.

2. Common Tool Types

Here are examples of tools that multi-agent systems often use:

| Tool Type | Example Use Case |
| --- | --- |
| Web Search | Current events, fact-checking, external info |
| Math Solver | Budgeting, engineering, or analytics tasks |
| APIs | Email, CRMs, ERPs, messaging platforms |
| Database Query | Inventory lookup, document retrieval |
| Code Execution | Running Python scripts, simulations |
| Browser | Navigating dynamic websites |
| Vector Store | Semantic document search |

Each agent may have access to specific tools, or a centralized tool hub may serve them all.

3. How ADK Enables Tool Integration

Google’s Agent Development Kit allows tool usage via its Tool abstraction. You can:

- Define tools as callable Python functions
- Attach them to specific agents or orchestrators
- Pass structured input/output schemas
- Chain tool responses back into agents

Example:

4. Building Custom Tools

You’re not limited to predefined tools — you can build tools for any external system.

Example: CRM tool
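A custom CRM lookup might start as an ordinary Python function like the sketch below. The in-memory `_FAKE_CRM` data and the `get_customer_record` name are invented for illustration; a real tool would call the CRM's API, and the exact way to wrap the function depends on the framework.

```python
from typing import Dict, Optional

# In-memory stand-in for a CRM; a real tool would call the CRM's API instead.
_FAKE_CRM: Dict[str, Dict[str, str]] = {
    "alice@example.com": {"name": "Alice", "stage": "qualified", "owner": "sam"},
    "bob@example.com": {"name": "Bob", "stage": "new", "owner": "kim"},
}


def get_customer_record(email: str) -> Optional[Dict[str, str]]:
    """Look up a customer by email address.

    Returns the record as a dict, or None when no customer matches.
    A clear docstring like this can double as the tool description.
    """
    return _FAKE_CRM.get(email.strip().lower())


print(get_customer_record("Alice@Example.com"))
```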

Wrap this as a tool in ADK and register it with the agent.

Tip: Always describe the tool clearly to the LLM so it knows when to use it.

5. Tool Routing and Selection

In more advanced setups, the orchestrator or a planning agent decides which tool to call based on user input.

Approaches include:

- Pattern matching or keyword detection
- Embedding similarity to find matching tool descriptions
- Using an LLM to select the tool (“Given this task, which tool should I use?”)

Example:

Or use an agent planner to decide:
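Both approaches can be combined in one small sketch: keyword routing first, with an optional planner callable as a second opinion. The keyword table, the function names, and the `web_search` default are assumptions for this example; the planner here is any function that maps a task to a tool name, such as a wrapper around an LLM call.

```python
from typing import Callable, Dict, List, Optional

TOOL_KEYWORDS: Dict[str, List[str]] = {
    "calculator": ["sum", "average", "multiply", "percent"],
    "web_search": ["latest", "news", "today", "current"],
    "database": ["inventory", "order", "customer"],
}


def route_by_keyword(task: str) -> Optional[str]:
    """Pick the tool whose keywords overlap most with the task text."""
    words = set(task.lower().split())
    scores = {tool: len(words & set(kws)) for tool, kws in TOOL_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def select_tool(task: str, planner: Optional[Callable[[str], str]] = None) -> str:
    """Use keyword routing first; defer to a planner (e.g. an LLM call) if unsure."""
    choice = route_by_keyword(task)
    if choice is None and planner is not None:
        choice = planner(task)
    return choice or "web_search"   # default tool for this sketch


print(select_tool("latest news today"))
```

Keyword routing is cheap and predictable, so reserving the planner for unmatched tasks keeps model calls to a minimum.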

6. Chaining Tools with Agents

Sometimes, tools are not used in isolation. Agents may chain tool outputs through reasoning steps.

Example: “What’s the average stock price of Microsoft in the last 30 days?”

Steps:

- Use finance API tool to get stock prices
- Use Python tool to calculate the average
- Return the result via agent

This enables agents to reason across multiple domains, tools, and even data formats.
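The stock-price steps above can be sketched as two tools chained by a small agent function. The prices are made-up mock values, not market data, and the function names are hypothetical.

```python
from statistics import mean
from typing import List


def fetch_closing_prices(ticker: str, days: int) -> List[float]:
    """Mock finance tool: returns made-up prices, not real market data."""
    base = 400.0
    return [base + (i % 5) for i in range(days)]


def average(values: List[float]) -> float:
    """Calculator tool: arithmetic mean of the inputs, rounded to cents."""
    return round(mean(values), 2)


def answer_average_price(ticker: str, days: int = 30) -> str:
    prices = fetch_closing_prices(ticker, days)   # step 1: data tool
    avg = average(prices)                          # step 2: calculation tool
    # Step 3: the agent formats the final answer.
    return f"Average closing price of {ticker} over {days} days (mock data): {avg}"


print(answer_average_price("MSFT"))
```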

7. Example: Tool-Using Agent in ADK

Let’s say your agent has access to:

- Google Search tool
- Wikipedia tool
- Calculator tool

Now give it a prompt:

“How tall is Mount Everest in feet minus the height of Burj Khalifa in meters?”

The agent would:

- Use the Search tool to find Everest’s height
- Use Wikipedia tool to find Burj Khalifa’s height
- Use the Calculator tool to subtract and convert units
- Output the answer

This is zero-shot tool reasoning: the agent selects and sequences tools without task-specific examples, relying on ADK’s integration with LangChain and external APIs.

Tool use turns passive LLM agents into interactive, dynamic problem solvers. With the Google ADK, tool use becomes:

- Declarative: Easily define tools in Python
- Modular: Attach tools to any agent
- Scalable: Add tools without changing agent logic
- Intelligent: Use LLMs to choose or chain tools based on task

Next, we’ll dive into how to integrate ADK agents into real-world applications — from websites and Slack bots to internal enterprise platforms.

8. Integrating ADK Agents into Real-World Applications

One of the most compelling advantages of building agents using Google’s Agent Development Kit is how easily they can be embedded into real-world systems. Whether you’re integrating them into internal dashboards, public-facing apps, messaging tools, or mobile platforms — ADK provides all the building blocks.

In this section, we’ll cover:

- Real-world use cases for ADK agents
- ADK + LangChain integration in app workflows
- Integrating agents into web apps, Slack bots, CRMs, and more
- Deployment best practices for stability and speed
- Security and authentication considerations

1. Common Use Cases of ADK in Applications

Here’s where people are embedding ADK-powered agents today:

| Domain | Agent Role |
| --- | --- |
| Customer Support | AI assistant in web chat or helpdesk, triaging user issues |
| Internal Dashboards | Knowledge assistant for enterprise documentation |
| Developer Tools | Coding assistant or API auto-doc explainer |
| Slack Bots | Workflow automation, meeting recap, or internal Q&A |
| Productivity Apps | Task agents for reminders, scheduling, or summarizing docs |
| Sales/CRM | Lead qualification assistant based on CRM data |
| Healthcare | Agent that finds documents or preps patient intake |

ADK makes it easy to connect agents to both structured and unstructured data, and to integrate them directly into end-user interfaces.

2. LangChain + ADK in App Workflows

LangChain and ADK work well together for building modular, composable applications.

A typical workflow looks like this:

- Frontend Input: User enters a prompt via a form, chatbox, or voice
- Request Processing: Sent to a backend API powered by a Flask/FastAPI app
- Agent Engine: Agent chain is executed using LangChain + ADK + tools
- Response Return: Output is formatted and returned to the frontend

You can package this behind a REST API, a WebSocket for real-time interactions, or even embed it inside mobile apps.

3. Integrating Agents into Web Apps

The simplest way to integrate an ADK agent into your web app:

- Step 1: Expose a REST API endpoint in Python using FastAPI
- Step 2: Add the agent logic within that endpoint
- Step 3: Call that API from your frontend (React, Vue, etc.)

This structure can power any kind of conversational UI or agent-backed tool. Use this approach in dashboards, admin portals, or even SaaS products.

4. Slack, Discord, and Messaging Integrations

Want your agents to live inside messaging tools?

Here’s what you need:

- A bot token for Slack or Discord
- A webhook that listens for messages
- Message content is sent to the ADK agent
- Reply is posted back via the bot

For Slack:

Agents can manage calendar events, pull documents, or respond to user queries — directly in chat.

5. Embedding into Enterprise Workflows

In enterprise contexts, agents may work behind the scenes, processing:

- Internal documents via retrieval-augmented generation (RAG)
- CRM entries (e.g., Salesforce, HubSpot)
- Ticketing systems (e.g., Jira, Zendesk)
- Databases or vector stores (Pinecone, FAISS, Weaviate)

This means your agents are not just answering questions — they’re doing real work across backend systems.

Example: Agent reads a sales meeting transcript → fetches the client record from HubSpot → drafts follow-up email.

6. Deployment Best Practices

When integrating agents in production apps:

- Use async execution: Avoid blocking main threads during LLM calls
- Set timeouts: Prevent infinite wait times due to network/API lags
- Retry on failure: Retry logic for rate limits or transient failures
- Use caching: For repeated inputs (e.g., LRU or Redis-based caching)
- Use batch mode: If processing multiple tasks for efficiency

Agents should be stateless by default, with optional user session handling for memory.

7. Security and Auth

If your agents access sensitive data or systems:

- Authenticate every API request with JWT or OAuth
- Role-based access control (RBAC) on actions/tools
- Audit logs for tool invocations
- Input sanitization to prevent prompt injection
- Encryption for data in transit and at rest

You can also limit tools per agent to prevent misuse — e.g., only allow data fetch, not data write.

8. Deployment Options

Here’s where you can host your ADK-based agents:

| Platform | Benefits |
| --- | --- |
| GCP (Vertex) | Seamless integration with Google ecosystem |
| AWS Lambda | Serverless with auto-scaling |
| Render/Heroku | Quick and simple deploy for MVPs |
| Docker + Kubernetes | Production-grade scalability and control |

You can containerize your LangChain + ADK logic using Docker and deploy to any environment.

Integrating ADK agents into real-world applications lets you:

-

Turn any user interface into an intelligent assistant

-

Bring AI to chat, voice, CRM, or admin workflows

-

Scale across departments, clients, or users

-

Retain full control over logic, data, and behavior

In the next section, we’ll explore how to evaluate agent performance, debug them, and monitor their behavior to ensure they operate reliably and safely.

9. Debugging, Monitoring, and Evaluating Agents in ADK

Building smart agents is one part of the job — ensuring they work consistently and improve over time is another. With ADK and LangChain, you have access to tools and best practices that help you:

-

Debug broken agent chains or tool calls

-

Monitor agent usage and performance

-

Log detailed traces of reasoning steps

-

Evaluate agent effectiveness using qualitative and quantitative metrics

-

Implement continuous improvement workflows

This section will cover both development-time debugging and production-grade monitoring systems.

1. Why Debugging Agents Is Different

Unlike traditional code, agent behavior is dynamic. It’s influenced by:

-

Prompt wording

-

LLM temperature and model version

-

Retrieved documents or tools available

-

User input context

-

Agent memory (if enabled)

This means failures may not be deterministic — you’ll need a flexible debugging workflow to trace behavior over time.

2. Enable Tracing and Verbose Logging

LangChain natively supports verbose mode and detailed tracing:

This prints every step the agent takes:

-

What the LLM was thinking

-

Which tool it used

-

What response it received

-

Final output

For structured tracing, you can use:

-

LangSmith: Logs agent chains, tokens used, latency, and outcomes

-

WandB (Weights & Biases): For more custom observability

-

OpenTelemetry: If you want enterprise-grade tracing into your existing observability stack

3. Monitoring Agent Performance in Production

In production systems, you’ll want to collect:

-

Number of requests per agent/tool

-

Average response time

-

Success vs failure rates

-

Tool execution errors

-

User satisfaction (via rating or thumbs up/down)

-

Prompt-token usage and cost tracking

This helps you answer:

-

Are agents getting slower over time?

-

Is any tool failing silently?

-

Are users getting accurate answers?

Grafana, Prometheus, or Cloud Monitoring (GCP) can be used to build dashboards showing these metrics.

4. Using LangSmith for Deep Evaluation

LangSmith is LangChain’s observability and evaluation tool.

With LangSmith, you can:

-

Re-run chains on historical inputs

-

Compare behavior across LLM versions

-

Identify hallucinations or broken logic

-

Score outputs against ground truth

-

Add human feedback loops

LangSmith is well suited to debugging multi-step agents, vector retrievers, and tool-calling agents.

5. Adding Custom Logs and Prompts

To better track reasoning, you can instrument your own logs inside custom tools:
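
A minimal sketch of such instrumentation, assuming plain Python functions as tools and the standard `logging` module (the `logged_tool` decorator and `lookup_order` tool are hypothetical names):

```python
import functools
import logging
import time

logger = logging.getLogger("agent.tools")

def logged_tool(func):
    """Log the arguments, outcome, and duration of every tool call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        logger.info("tool=%s args=%r kwargs=%r", func.__name__, args, kwargs)
        try:
            result = func(*args, **kwargs)
        except Exception:
            # logger.exception records the traceback, then we re-raise.
            logger.exception("tool=%s failed", func.__name__)
            raise
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("tool=%s ok in %.1f ms", func.__name__, elapsed_ms)
        return result
    return wrapper

@logged_tool
def lookup_order(order_id):
    # Hypothetical tool body; a real tool would query a backend here.
    return {"order_id": order_id, "status": "shipped"}
```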

Also, break prompts into parts with clear instruction blocks, e.g.:
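
For instance, a prompt could be assembled from labeled blocks like this. The block labels and the `build_prompt` helper are illustrative assumptions, not a required format:

```python
def build_prompt(role, instructions, context, question):
    """Assemble a prompt from labeled blocks so each part is easy to inspect."""
    sections = [
        ("ROLE", role),
        ("INSTRUCTIONS", instructions),
        ("CONTEXT", context),
        ("QUESTION", question),
    ]
    return "\n\n".join(f"### {label}\n{body.strip()}" for label, body in sections)

prompt = build_prompt(
    role="You are a support assistant for an online store.",
    instructions="Answer in at most three sentences. Say so if the context is insufficient.",
    context="Order A1 shipped on Monday.",
    question="Where is my order?",
)
```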

This structured prompting improves observability and maintainability.

6. Handling Failures Gracefully

Agents can fail for several reasons:

-

Timeout from LLM or tools

-

Tool not found or unavailable

-

Invalid input format

-

Unexpected API responses

-

Hallucination of non-existent tools or entities

To handle these:

-

Wrap agent execution in try-except blocks

-

Return fallback responses (“I couldn’t find that”)

-

Log all exceptions for analysis

-

Add retry logic (e.g., exponential backoff for API failures)

-

Build a “safe mode” with limited capabilities

Agents should never crash your app. Always design with fault tolerance in mind.
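
The try-except, fallback, and safe-mode ideas above can be sketched as one wrapper. This is a simplified illustration that treats an agent as any callable taking the user input; `run_agent_safely` and `FALLBACK_REPLY` are hypothetical names.

```python
import logging

logger = logging.getLogger("agent.runtime")
FALLBACK_REPLY = "I couldn't find that. Please try rephrasing your request."

def run_agent_safely(agent, user_input, safe_mode_agent=None):
    """Run agent(user_input) without letting an exception reach the caller."""
    try:
        return agent(user_input)
    except Exception:
        logger.exception("primary agent failed for input %r", user_input)
    if safe_mode_agent is not None:
        try:
            # Safe mode: a reduced-capability agent, e.g. one with no tools.
            return safe_mode_agent(user_input)
        except Exception:
            logger.exception("safe-mode agent also failed")
    return FALLBACK_REPLY
```

Every failure is logged with its traceback, so the fallback reply hides the error from the user but not from you.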

7. Evaluating Agent Outputs

You can measure performance in three ways:

Method | Example |
Automated scoring | Compare to a gold output using overlap metrics (BLEU, ROUGE) or semantic similarity (cosine similarity of embeddings) |
Human rating | Users rate the helpfulness of answers (1–5 stars) |
Task-based metrics | Track completion of specific goals (e.g., booking success rate) |

LangChain supports evaluation pipelines, and LangSmith lets you benchmark chains across datasets.
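
To make automated scoring concrete, here is a deliberately simple sketch: cosine similarity over word counts rather than embeddings, plus a pass rate over (output, gold) pairs. The 0.7 threshold is an arbitrary assumption; production setups typically use embedding models or the metrics named above.

```python
import math
import re
from collections import Counter

def cosine_similarity(text_a, text_b):
    """Cosine similarity between word-count vectors, from 0.0 to 1.0."""
    a = Counter(re.findall(r"\w+", text_a.lower()))
    b = Counter(re.findall(r"\w+", text_b.lower()))
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def pass_rate(pairs, threshold=0.7):
    """Share of (output, gold) pairs whose similarity meets the threshold."""
    if not pairs:
        return 0.0
    passed = sum(cosine_similarity(out, gold) >= threshold for out, gold in pairs)
    return passed / len(pairs)
```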

8. Test with Synthetic or Recorded Inputs

Create a test set of prompts across use cases, like:

-

“What’s the balance sheet of Tesla?”

-

“Summarize the last 3 board meetings.”

-

“Explain quantum computing to a 10-year-old.”

-

“Schedule a call between 3 PM and 5 PM next Thursday.”

Feed them to your agents and evaluate their outputs. Over time, update this set to cover edge cases and new features.

9. Prompt Versioning and LLM Change Management

LLMs are evolving — their behavior can shift between model versions.

To mitigate regressions:

-

Version your prompts (v1, v2, etc.)

-

Keep test sets and historical logs

-

A/B test new prompts or tools before rollout

-

Validate changes across different models (e.g., GPT-4 vs Claude)

-

Create changelogs for prompt or tool changes

Think of agent prompts as software logic — version control is essential.
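
A lightweight way to start, before adopting a dedicated tool, is to keep prompt versions and their change notes in one place. The `PromptRegistry` class below is a hypothetical in-memory sketch; in practice the same data would usually live in version control or a database.

```python
from dataclasses import dataclass, field

@dataclass
class PromptRegistry:
    """Hypothetical in-memory store of named, versioned prompt templates."""
    _versions: dict = field(default_factory=dict)

    def register(self, name, template, note=""):
        """Append a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append({"version": len(history) + 1, "template": template, "note": note})
        return len(history)

    def get(self, name, version=None):
        """Return the latest template, or a specific version if given."""
        history = self._versions[name]
        entry = history[-1] if version is None else history[version - 1]
        return entry["template"]

    def changelog(self, name):
        return [f"v{e['version']}: {e['note']}" for e in self._versions[name]]
```

Pinning a version number in each deployment makes it possible to roll back a prompt the same way you would roll back code.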

10. Logging Tool Latency and Reliability

If you have custom tools (APIs, search engines, databases), track:

-

Response times

-

Timeout frequency

-

Error rates (500s, 403s, etc.)

-

Cache hits vs misses

Poor tool performance can lead to poor agent behavior, even if the LLM is fine.
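
As a sketch of how these numbers might be collected in-process, the hypothetical `ToolStats` class below counts calls and errors and accumulates latency per tool. A production system would more likely export the same counters to Prometheus or a similar backend.

```python
import time
from collections import defaultdict

class ToolStats:
    """Aggregate call counts, errors, and latency per tool."""

    def __init__(self):
        self.calls = defaultdict(int)
        self.errors = defaultdict(int)
        self.total_seconds = defaultdict(float)

    def record(self, tool_name, func, *args, **kwargs):
        """Call func and record its outcome under tool_name."""
        start = time.perf_counter()
        self.calls[tool_name] += 1
        try:
            return func(*args, **kwargs)
        except Exception:
            self.errors[tool_name] += 1
            raise
        finally:
            # Runs on success and failure, so failed calls count toward latency too.
            self.total_seconds[tool_name] += time.perf_counter() - start

    def summary(self, tool_name):
        calls = self.calls[tool_name]
        return {
            "calls": calls,
            "error_rate": self.errors[tool_name] / calls if calls else 0.0,
            "avg_latency_s": self.total_seconds[tool_name] / calls if calls else 0.0,
        }
```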

Debugging and evaluating agents built with ADK and LangChain requires a mindset shift:

-

Treat agents like dynamic systems, not static code

-

Log every step for transparency

-

Monitor tools and LLM interactions

-

Use human feedback and test datasets

-

Embrace prompt versioning and continuous improvement

10. Fine-Tuning and Optimizing ADK Agents for Performance and Cost

As your agent-based applications scale, you’ll start noticing areas where you can reduce cost, increase performance, and improve user experience. This is where fine-tuning and optimization come in.

While Google’s Agent Development Kit (ADK) doesn’t require fine-tuning to function well (it works great out of the box with foundation models), customizing certain aspects of your agents and architecture can lead to big gains — especially when you’re dealing with production workloads.

This section covers both model-level fine-tuning (for accuracy) and system-level optimizations (for speed and cost savings).

1. Why Optimize Your Agents?

Optimizing isn’t just about shaving off milliseconds. It directly impacts:

-

Response speed – Users drop off if agents feel slow

-

Inference cost – Especially when using premium LLMs like GPT-4

-

Token usage – Larger contexts = higher costs

-

Accuracy – More relevant answers, fewer hallucinations

-

Scalability – Handle more users with fewer resources

2. System-Level Optimizations (Before Fine-Tuning)

Before diving into fine-tuning LLMs, you can often get better performance just by tweaking your architecture.

a. Use Lower-Cost LLMs for Simpler Tasks

Not every task needs GPT-4. Use:

-

Claude Instant or GPT-3.5 Turbo for routine queries

-

Gemini 1.5 Pro for balanced performance

-

Mixtral or Mistral 7B for local or on-device tasks (via Ollama)

Split logic into chains where “cheap” models do classification, routing, or summarization, and only escalate complex tasks to more expensive models.
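
The routing rule can be as simple as a function that picks a tier from the task type and prompt length. In this sketch the model names are placeholders and the 2,000-character cutoff is an arbitrary assumption to tune against your own traffic.

```python
SIMPLE_TASKS = {"classification", "routing", "summarization"}

def choose_model(task_type, prompt, *, cheap="cheap-model",
                 premium="premium-model", max_cheap_chars=2000):
    """Pick a model tier from the task type and prompt length."""
    if task_type in SIMPLE_TASKS and len(prompt) <= max_cheap_chars:
        return cheap
    # Anything unfamiliar or very long escalates to the stronger model.
    return premium
```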

b. Reduce Prompt Size and Memory Footprint

Large prompts = high cost. To cut tokens:

-

Trim redundant instructions

-

Don’t repeat user messages

-

Use compact output formats

-

Disable memory or truncate it smartly (e.g., last 3 interactions only)

-

Compress retrieved documents (summarize before injecting them into the prompt)

Example:
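
One way to keep only recent turns is sketched below. It assumes a hypothetical message format (dicts with `role` and `content` keys) and that each turn is one user message plus one assistant reply.

```python
def truncate_history(messages, max_turns=3):
    """Keep system messages plus the last max_turns user/assistant exchanges."""
    system = [m for m in messages if m["role"] == "system"]
    dialogue = [m for m in messages if m["role"] != "system"]
    # Each turn is two messages; guard max_turns=0, since [-0:] keeps everything.
    recent = dialogue[-2 * max_turns:] if max_turns > 0 else []
    return system + recent
```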

c. Use Streaming for Faster Responses

Let users see the answer as it’s being generated:
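
The idea can be sketched with a plain Python generator. Here `fake_token_stream` is a hypothetical stand-in for a streaming LLM client, and `stream_to_user` forwards each chunk as soon as it arrives:

```python
import sys
import time

def fake_token_stream(text, delay_s=0.0):
    """Hypothetical stand-in for a streaming LLM client: yields one word at a time."""
    for word in text.split():
        time.sleep(delay_s)
        yield word + " "

def stream_to_user(chunks, write=sys.stdout.write):
    """Forward each chunk immediately and return the full reply at the end."""
    parts = []
    for chunk in chunks:
        write(chunk)  # the user sees this before later chunks exist
        parts.append(chunk)
    return "".join(parts).strip()
```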

This reduces perceived latency even if the backend is the same.

d. Cache LLM Responses and Tool Results

Use LangChain’s inbuilt caching or Redis/MongoDB-based cache:
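
As a framework-neutral illustration, the sketch below uses Python's built-in `functools.lru_cache` instead of LangChain's or Redis-based caching. `cached_completion` is a hypothetical wrapper whose body stands in for a real, paid LLM call.

```python
import functools

calls = {"count": 0}  # counts how often the "model" is actually invoked

@functools.lru_cache(maxsize=1024)
def cached_completion(model, prompt):
    """Hypothetical wrapper: repeated (model, prompt) pairs skip the LLM call."""
    calls["count"] += 1
    return f"[{model}] answer to: {prompt}"
```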

Cache results of deterministic inputs (like summaries or explanations), especially for repeated prompts.

3. Fine-Tuning LLMs for Domain-Specific Accuracy

While most ADK projects won’t need model fine-tuning, some use cases benefit significantly:

-

Medical diagnosis assistants

-

Legal contract summarizers

-

Financial analysis agents

-

Technical support agents with product-specific knowledge

Fine-tuning can help with tone, style, format, or domain-specific terminology.

Steps to fine-tune:

-

Collect ~500–5,000 high-quality input/output examples

-

Format them as JSONL (input: prompt, output: ideal completion)

-

Use Google Vertex AI, OpenAI Fine-Tuning, or HuggingFace Trainer

-

Evaluate vs base model with accuracy tests

-

Deploy with fallback to original model if fine-tuned one fails

Tip: Fine-tuning is best when prompt engineering and RAG no longer get you where you need to be.
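
The JSONL formatting step might look like the sketch below. The `input` and `output` field names follow the description above; each fine-tuning service defines its own schema, so treat them as an assumption and check the provider's documentation before uploading.

```python
import json

def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    lines = []
    for prompt, completion in examples:
        if not prompt.strip() or not completion.strip():
            continue  # skip empty rows rather than train on them
        lines.append(json.dumps({"input": prompt, "output": completion},
                                ensure_ascii=False))
    return "".join(line + "\n" for line in lines)
```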

4. Prompt Engineering as an Alternative to Fine-Tuning

Sometimes you don’t need to train a new model — you just need a better prompt.

Prompt tricks that improve performance:

-

Chain-of-thought: “Let’s think step-by-step…”

-

Format instructions: “Respond in this format: A | B | C”

-

Role-based context: “You are a financial planner…”

-

Constraints: “Limit to 50 words. Use bullet points.”

Example for a summarizer agent:
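
A possible prompt that combines the four techniques, written as a Python template. The wording is illustrative only and should be tuned against your own test set.

```python
SUMMARIZER_PROMPT = (
    "You are an analyst who writes executive summaries.\n"       # role-based context
    "Read the text step by step before answering.\n"             # chain-of-thought nudge
    "Respond in this format: Topic | Key point | Action item\n"  # format instruction
    "Limit the summary to 50 words.\n\n"                         # constraint
    "Text:\n{text}"
)

def build_summarizer_prompt(text):
    """Fill the template with the text to summarize."""
    return SUMMARIZER_PROMPT.format(text=text.strip())
```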

5. Optimizing Tool Usage

Agents calling tools often consume more compute than expected. Best practices:

-

Debounce frequent API calls

-

Pre-fetch static data (like holidays or exchange rates)

-

Use async execution if tools run in parallel

-

Group tasks — reduce steps if possible (e.g., don’t search, then summarize; just search and summarize in one go)

Also consider limiting the tools available to agents to avoid wasteful behavior.
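
When tools are independent of each other, running them concurrently is often the cheapest win. A minimal sketch with `asyncio.gather`, where both tool functions are hypothetical and `asyncio.sleep` stands in for network latency:

```python
import asyncio

async def fetch_weather(city):
    await asyncio.sleep(0.05)  # stands in for a network round trip
    return f"weather for {city}"

async def fetch_calendar(user):
    await asyncio.sleep(0.05)
    return f"calendar for {user}"

async def gather_context(city, user):
    """Run independent tool calls concurrently instead of one after another."""
    weather, calendar = await asyncio.gather(fetch_weather(city), fetch_calendar(user))
    return {"weather": weather, "calendar": calendar}
```

Total wait time is roughly that of the slowest tool rather than the sum of all of them.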

6. Cost Management Strategies

If you’re deploying at scale, keep an eye on usage-based pricing.

-

Token budgeting: Set per-agent or per-user token limits

-

Quota tiers: Offer basic and pro usage plans

-

LLM cost tracking: Use LangSmith, OpenAI usage APIs, or billing dashboards

-

Model switching: Use cheaper models during low-priority hours or for non-critical tasks
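
Token budgeting, for example, can start as a small in-memory check before each request. `TokenBudget` below is a hypothetical sketch; a real deployment would persist usage and reset it per billing period.

```python
class TokenBudget:
    """Track token usage per user against a fixed limit."""

    def __init__(self, limit_per_user):
        self.limit = limit_per_user
        self.used = {}

    def remaining(self, user_id):
        return self.limit - self.used.get(user_id, 0)

    def charge(self, user_id, tokens):
        """Deduct tokens if the user can afford them; return whether it succeeded."""
        if tokens > self.remaining(user_id):
            return False
        self.used[user_id] = self.used.get(user_id, 0) + tokens
        return True
```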

7. Monitoring the Impact of Optimizations

Don’t just optimize — measure the impact:

-

Token usage before and after

-

Latency reduction

-

Accuracy changes (manual or automatic)

-

Cost savings per 1,000 users

-

Drop-off rate (how many users leave before completion)

Visualization tools like Grafana, LangSmith dashboards, or Google Cloud Monitoring help with this.

8. A/B Testing Prompts or Models

Always test changes before rolling them out to all users.

You can:

-

Compare two prompt versions

-

Test GPT-3.5 vs Claude vs Gemini

-

Try zero-shot vs few-shot prompts

-

Log which version performs better (using human ratings or task success rates)

Over time, you’ll build a library of “proven prompts” and “best-performing agents.”
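
A simple way to run such a test is to assign each user to a variant deterministically and track task success per variant. The sketch below uses only the standard library; `assign_variant` and `ABResults` are hypothetical names.

```python
import hashlib
from collections import defaultdict

def assign_variant(user_id, variants=("A", "B")):
    """Map a user to a variant by hash, so they always see the same one."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]

class ABResults:
    """Count trials and successes per variant."""

    def __init__(self):
        self.successes = defaultdict(int)
        self.trials = defaultdict(int)

    def record(self, variant, success):
        self.trials[variant] += 1
        self.successes[variant] += int(success)

    def success_rate(self, variant):
        trials = self.trials[variant]
        return self.successes[variant] / trials if trials else 0.0
```

With small samples the difference between variants can be noise, so collect enough trials before declaring a winner.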

Optimizing ADK agents is a mix of architectural design, smart prompting, and occasional fine-tuning. You don’t need to jump to training custom models right away — in most cases, streamlining your system will get you 80% of the way there.

11. Real-World Use Cases, Templates, and Multi-Agent Patterns

Now that we’ve covered how to build, optimize, and manage agents using Google’s Agent Development Kit (ADK), let’s look at how these principles come to life in real-world scenarios. Whether you’re developing an internal enterprise assistant or building a commercial SaaS product, you’ll find proven templates and multi-agent patterns that can fast-track development.

1. Real-World Use Cases of ADK-Based Agents

a. Customer Support Automation

Use Case: AI agents that classify, triage, and resolve customer queries via chat, email, or ticketing systems.

-

Classification Agent: Detects topic (billing, tech support, returns)

-

Information Agent: Retrieves relevant FAQ or order info

-

Resolution Agent: Suggests steps, triggers backend workflows (e.g., refund, reset password)

Agent Type: Multi-agent workflow with tool usage and memory

Integration: Zendesk, Intercom, HubSpot, Google Dialogflow

b. Sales Enablement & Lead Qualification

Use Case: Inbound lead triage and follow-up using AI agents

-

Qualification Agent: Parses lead info, assigns score

-

Follow-up Agent: Personalizes emails or messages

-

CRM Agent: Logs info to HubSpot, Salesforce, or Notion

Agent Type: Reactive, tool-augmented with context memory

Tools: LLM + Google Sheets + Email API + CRM

c. Healthcare Triage Assistant

Use Case: Initial patient triage before scheduling or escalation

-

Symptom Checker Agent: Gathers and classifies symptoms

-

Medical Query Agent: Queries clinical knowledge base

-

Referral Agent: Suggests doctor/specialist, books appointment

Agent Type: Goal-driven, chain of reasoning, RAG + tools

Compliance: Requires HIPAA-compliant infra (Vertex AI, LangChain with Google Cloud)

d. AI Tutors and Educational Coaches

Use Case: Personalized education tools for students

-

Explainer Agent: Breaks down complex concepts

-

Practice Agent: Generates quizzes/tests

-

Mentor Agent: Tracks progress, recommends resources

Agent Type: Agent group with memory, tool usage (search, media, API)

Tools: Gemini 1.5, YouTube API, Google Docs

e. Enterprise Knowledge Management

Use Case: Knowledge agents for internal Q&A in large companies

-

Retriever Agent: Pulls data from internal wiki, docs, tickets

-

Answer Agent: Summarizes or generates answer

-

Validator Agent: Flags outdated info or hallucinations

Integration: Google Drive, Confluence, Notion, Microsoft SharePoint

Security: OAuth + Role-based access

2. Common Multi-Agent Patterns

As your application scales, a single agent often won’t cut it. Multi-agent patterns allow you to divide responsibilities across smaller, composable agents.

Here are the most widely used ones:

a. Sequential Chain (Assembly Line)

Each agent hands off to the next. Example:

Input → Classifier Agent → Retriever Agent → Summarizer Agent → Formatter Agent → Output

Use this when tasks are distinct and don’t require collaboration.
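
Stripped of any framework, the assembly line is just a list of steps that each read and extend a shared state. In this sketch the three steps are rule-based stand-ins for LLM-backed agents, and all names are hypothetical.

```python
def classify(state):
    state["topic"] = "billing" if "invoice" in state["input"].lower() else "general"
    return state

def retrieve(state):
    docs = {
        "billing": "Invoices are issued on the 1st of each month. Contact billing for copies.",
        "general": "See the help center.",
    }
    state["context"] = docs[state["topic"]]
    return state

def summarize(state):
    # Keep only the first sentence of the retrieved context.
    state["answer"] = state["context"].split(".")[0] + "."
    return state

def run_chain(user_input, steps):
    """Pass a shared state dict through each step in order."""
    state = {"input": user_input}
    for step in steps:
        state = step(state)
    return state
```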

b. Planner + Executor

-

Planner Agent: Determines tasks needed to reach goal

-

Executor Agents: Handle one task each (e.g., search, email, code)

This is good for multi-step goals like “create a presentation” or “summarize 10 PDFs and email the summary.”

You can implement using LangGraph’s planner/executor pattern or via ADK’s agent_executor.
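
Independent of any particular framework, the pattern reduces to a planner that emits named tasks and a lookup table of executors. The sketch below is keyword-based purely for illustration; a real planner would ask an LLM to decompose the goal, and none of these names come from LangGraph or ADK.

```python
def plan(goal):
    """Hypothetical planner: split a goal into named tasks by keyword."""
    steps = []
    if "summarize" in goal.lower():
        steps.append(("summarize", goal))
    if "email" in goal.lower():
        steps.append(("email", goal))
    return steps or [("answer", goal)]

# Each executor handles exactly one kind of task.
EXECUTORS = {
    "summarize": lambda task: "summary ready",
    "email": lambda task: "email sent",
    "answer": lambda task: "answered directly",
}

def run(goal):
    """Plan the goal, then dispatch each task to its executor in order."""
    return [(name, EXECUTORS[name](task)) for name, task in plan(goal)]
```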

c. Negotiating Agents (Swarm AI)

Multiple agents evaluate a situation and vote/agree on the best approach.

-

Used in legal decisions, risk assessments, or product recommendations

-

Agents can specialize in different domains (finance, legal, ethics)
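
The voting step can be sketched as a plain majority vote over each agent's recommendation. This is one possible aggregation rule among many (weighted votes or a debate round are alternatives), and `majority_vote` is a hypothetical helper.

```python
from collections import Counter

def majority_vote(votes):
    """Return (winner, share of votes); ties go to the option seen first."""
    if not votes:
        raise ValueError("at least one vote is required")
    winner, count = Counter(votes).most_common(1)[0]
    return winner, count / len(votes)
```

A low winning share is a useful signal to escalate the decision to a human reviewer.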

d. Critic and Refiner Loop

One agent generates output; another reviews and improves it.

-

Example: Writer Agent → Critic Agent → Rewrite Agent

-

You can add a final human-in-the-loop if needed

Helpful for tasks like email writing, long-form reports, or summarization.
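
The loop itself is short. In the sketch below the critic and rewriter are rule-based stand-ins for LLM-backed agents, and the round cap is an assumption that keeps a disagreeing pair from looping forever.

```python
def refine_until_accepted(draft, critic, rewriter, max_rounds=3):
    """Alternate critic and rewriter until the critic has no feedback."""
    for _ in range(max_rounds):
        feedback = critic(draft)
        if not feedback:
            break  # critic is satisfied
        draft = rewriter(draft, feedback)
    return draft

# Hypothetical stand-ins for LLM-backed agents.
def length_critic(text, max_words=8):
    return "too long" if len(text.split()) > max_words else ""

def trimming_rewriter(text, feedback):
    words = text.split()
    return " ".join(words[: len(words) - 2])  # drop the last two words
```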

e. Role-Based Collaboration (Teams)

Assign different roles with responsibilities:

-

Researcher Agent → Fact-checker Agent → Presenter Agent

-

Each agent accesses different tools, knowledge bases, or personas

Mimics human teamwork with delegation.

3. Starter Templates (LangChain + ADK + LangGraph)

Below are plug-and-play templates you can use to start faster.

a. RAG-Based QA System

b. Planner + Executor Agent Chain

c. Memory-Enabled Support Bot

In enterprise settings, you may need to implement:

-

Auth with OAuth2 or Firebase Auth

-

RAG with encrypted data

-

Audit logs for agent behavior

-

Data masking for sensitive inputs/outputs

Always check regulatory requirements (e.g., HIPAA, GDPR, SOC2) if deploying in regulated industries.

5. Best Practices from Successful ADK Projects

-

Start small – One agent solving one problem well

-

Instrument early – Add logs, token tracking, and feedback

-

Version your prompts – Track how prompt tweaks affect performance

-

Maintain agent profiles – Treat agents like personas with evolving responsibilities

-

Design for failure – Add retry logic, escalation agents, and fallback flows

The power of Google’s Agent Development Kit is best realized when you use the right patterns and practices to model human-like collaboration among agents. Whether it’s a legal assistant, a technical documentation bot, or a tutoring agent, the principles remain the same: clarity of goal, clean orchestration, secure data flow, and constant evaluation.

Next, we’ll explore how to deploy and monitor your ADK-based agents in production environments, including tips for staging, A/B testing, and observability.

13. Conclusion and the Future of Agent Development with ADK

The landscape of software is rapidly evolving, and agent-based systems powered by Large Language Models (LLMs) are at the center of this transformation. Google’s Agent Development Kit (ADK) has emerged as one of the most developer-friendly, flexible, and future-ready toolkits to build these intelligent agents — not just for research, but for real-world, production-ready systems.

Let’s recap what we’ve covered and explore what’s next for developers, businesses, and the broader AI ecosystem.

1. The Journey So Far

Throughout this guide, we’ve explored the full lifecycle of building with ADK:

-

Understanding the Agent Paradigm and how it contrasts with traditional software

-

Getting Started with ADK: architecture, setup, and components

-

Defining Agent Goals and Capabilities using intent definitions, toolkits, and workflows

-

Integrating Language Models like Gemini and open-source LLMs

-

Building Multi-Agent Systems using Coordinators, Memory, and Role-based Design

-

Real-World Use Cases, from internal enterprise agents to customer-facing solutions

-

Evaluation, Monitoring, and Deployment strategies for scaling agents safely and effectively

Each section was aimed at giving you a practical and strategic foundation to not only build, but also scale agent-powered applications.

2. Why ADK Stands Out

Google’s approach to ADK is not to provide a “black-box” solution, but to give developers full visibility and control:

-

Interoperability: Easily integrates with LangChain, HuggingFace, Vertex AI, and Firebase

-

Customizability: Create custom planners, tool wrappers, evaluators, and routing logic

-

Modularity: Pick and choose components that fit your use case instead of being locked into a rigid framework

-

Security and Scale: Built with Google Cloud best practices, making it easier to achieve enterprise-grade compliance

This flexibility makes ADK suitable whether you’re building a smart internal assistant for your dev team or launching a full-scale, multi-agent customer service platform.

3. What the Future Holds

The future of agents is collaborative, persistent, and embodied. Here’s what’s coming next in the world of agentic systems — and how ADK is positioned to support it.

a. Multi-modal Agents

The next-gen agents will go beyond text and voice. With Gemini 1.5’s native support for video, images, and documents, you’ll soon build agents that:

-

Watch a video and summarize its legal implications

-

View photos of machinery and suggest repairs

-

Read receipts and auto-file expense reports

b. Agents with Memory and Long-term Goals

ADK’s experimental support for memory modules will expand into persistent agents capable of:

-

Remembering long-term user preferences

-

Keeping track of ongoing projects or business workflows

-

Acting autonomously over days or weeks, not just session-by-session

c. Agent Ecosystems

Think of ADK not just for individual bots, but for ecosystems of agents:

-

A sales agent that works with a marketing planner and a lead qualifier

-

A healthcare intake agent that coordinates with appointment schedulers and diagnostic tools

-

A procurement assistant that negotiates with multiple vendor agents autonomously

This is where multi-agent coordination and cooperative problem-solving will shine — and ADK is already laying the groundwork.

d. Custom LLMs + Private RAG Systems

We’ll see more companies training their own models or building fine-tuned agents around internal data using Retrieval-Augmented Generation (RAG). ADK’s support for plug-and-play vector search makes it a natural fit for:

-

Knowledge management

-

Internal Q&A

-

Decision support systems

e. Regulatory and Ethical Frameworks

As agents take on decision-making roles in sensitive domains like healthcare, finance, and law, expect:

-

Clear guidelines on transparency and explainability

-

Mandatory human-in-the-loop frameworks

-

Auditable trails for agent behavior and training data

ADK’s logging and observability features make it easier to stay compliant.

4. Final Thoughts for Developers and Founders

Whether you’re a startup founder, a product manager, or an AI enthusiast — the time to start building with agents is now.

-

If you’re a solo builder: Start with a lightweight assistant using ADK + Gemini Flash. Focus on value, not complexity.

-

If you’re a startup: Identify one core workflow that can benefit from automation. Build a single-purpose agent and validate it.

-

If you’re an enterprise team: Use ADK to prototype internal agents before scaling to customer-facing systems.

The tooling, the models, and the ecosystem have matured to the point where you can move from idea to production in weeks, not years.

5. Your Next Steps

-

Clone the official ADK template repo from Google GitHub

-

Choose a domain you’re familiar with and define the agent’s job-to-be-done

-

Set up telemetry, feedback loops, and a safe testing environment

-

Keep iterating — agents improve as you improve their tooling, prompts, and context

ADK represents a new era of development: systems that don’t just respond, but reason, collaborate, and adapt. This guide should give you the clarity to get started — now it’s your move.

Back to You!

If you’re thinking about creating a smart assistant or automating tasks using Google’s Agent Development Kit, we’re here to help. As a leading AI development company, Aalpha works with businesses to build powerful, custom AI agents that actually get the job done — built right, built to scale. Contact us and get started today!

Written by:

Stuti Dhruv

Stuti Dhruv is a Senior Consultant at Aalpha Information Systems, specializing in pre-sales and advising clients on the latest technology trends. With years of experience in the IT industry, she helps businesses harness the power of technology for growth and success.
